What are Large Language Models (LLMs) and what can they do?

LLMs are the category of AI models behind products such as ChatGPT, Bing Chat and Bard; GPT-3.5, the model behind the original ChatGPT, is one example. They are a type of artificial neural network trained on a large corpus of text data. These models use a technique called likelihood-based text completion, which means that they predict the most probable next word or sequence of words given the previous context.

For example, given the context "Amit is writing a", an LLM might predict "email", "song", "LinkedIn post" or "love letter", among other options. The model calculates a probability for each possible continuation and, in the simplest case, selects the most likely one based on what it learned from the training data; in practice, many systems instead sample from these probabilities so that their output varies. (P.S.: in this case the respective probabilities are likely to be around 0.37, 0.12, 0.50 and 0.003 ;))
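To make that last step concrete, here is a minimal Python sketch. It assumes the model has already produced a raw score (a "logit") for each candidate continuation; the scores below are made up for illustration and are not taken from any real model. The softmax function turns the scores into probabilities that sum to 1, and the snippet contrasts greedy selection (always take the top candidate) with sampling in proportion to probability.

```python
import math
import random

def softmax(scores):
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - m) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

# Hypothetical scores for continuations of "Amit is writing a"
logits = {"LinkedIn post": 2.5, "email": 1.8, "song": 0.4, "love letter": -2.0}

probs = softmax(logits)

# Greedy decoding: always pick the single most likely continuation
greedy = max(probs, key=probs.get)

# Sampling: pick a continuation at random, weighted by its probability
rng = random.Random(0)  # fixed seed so the run is repeatable
sampled = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

for word, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{word:>14}: {p:.3f}")
print("greedy:", greedy)
print("sampled:", sampled)
```

Greedy selection always returns the same answer for the same context, while sampling will sometimes pick a less likely option, which is one reason the same prompt can produce different replies.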

LLMs use a type of neural network called a transformer, which is designed to handle long-range dependencies in text data through a mechanism called self-attention: each word can draw on whichever earlier words in the context are most relevant to it. The transformer has multiple layers that process the input text, each layer refining and adding to the model's understanding of the text.
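The core operation inside each transformer layer is self-attention. The pure-Python sketch below shows scaled dot-product attention with a causal mask, so each token can only look at itself and earlier tokens, as in next-word prediction. The three tokens and their 2-dimensional query, key and value vectors are toy values chosen for illustration; in a real model they come from learned projections, have hundreds or thousands of dimensions, and are computed by many attention heads in parallel.

```python
import math

def softmax(xs):
    """Convert a list of scores into probabilities that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention with a causal mask.

    Position i compares its query with the keys of positions 0..i, turns the
    scaled scores into weights, and outputs the weighted mix of their values.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        visible_keys = keys[: i + 1]      # causal mask: no peeking at later tokens
        visible_values = values[: i + 1]
        scores = [dot(q, k) / math.sqrt(d) for k in visible_keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, visible_values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 2-dimensional vectors for a 3-token input (illustrative values only)
tokens = ["parties", "binding", "arbitration"]
Q = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0]]
K = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

out, weights = causal_self_attention(Q, K, V)
for tok, w in zip(tokens, weights):
    print(f"{tok:>12} attends with weights {[round(x, 2) for x in w]}")
```

Stacking many such layers (each followed by a small feed-forward network in a real transformer) is what lets the model build up progressively richer representations of the text.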

During training, the LLM is presented with a large dataset of text (for a legal application, this might include the text of legal contracts), and the model is trained to predict the next word or sequence of words in the text. The model learns to identify patterns and relationships in the text data, such as the frequency of certain words, phrases, or syntactical structures.
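The training objective itself can be illustrated with a much simpler model. The sketch below is a bigram model: it counts which word follows which in a tiny, made-up corpus and turns the counts into next-word probabilities. This is not how transformers are trained (they adjust millions or billions of weights by gradient descent rather than keeping a table of counts), but it shows the same "learn what tends to come next from the data" idea.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Estimate P(next word | previous word) by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    # Normalise each row of counts into probabilities that sum to 1
    return {
        prev: {w: c / sum(nexts.values()) for w, c in nexts.items()}
        for prev, nexts in counts.items()
    }

# A tiny, made-up "training set" of contract-style clauses
corpus = [
    "disputes shall be resolved through binding arbitration",
    "disputes shall be resolved through mediation",
    "claims shall be resolved through binding arbitration",
    "this agreement shall be governed by the laws of delaware",
]

model = train_bigram(corpus)
print(model["through"])  # "binding" gets about 0.67, "mediation" about 0.33
print(model["shall"])    # "be" follows "shall" every time: probability 1.0
```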

This is the technology underlying some of the recent stunning advances like ChatGPT, Bing Chat and Bard. So, before we learn how to communicate effectively with an LLM (a topic we'll cover in detail in subsequent posts), let's try to discover how much LLMs understand about our language.

And the really big question: can LLMs really think and reason like humans?

What can LLMs learn about our language and what can they not learn?

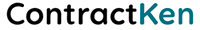

Some studies suggest that what looks like planning and reasoning in LLMs is, in reality, pattern recognition acquired through repeated exposure to the same sequences of events and decisions. This is akin to how humans acquire some skills, such as driving, which at first demand careful thought and coordination of actions and decisions but gradually become things we can do without active thinking.

There is a clear distinction between language understanding and likelihood-based text completion

As Yann LeCun, VP and chief AI scientist at Meta and award-winning deep learning pioneer, and Jacob Browning, a post-doctoral associate in the NYU Computer Science Department, wrote in a recent article, “A system trained on language alone will never approximate human intelligence, even if trained from now until the heat death of the universe.”

The two scientists note, however, that LLMs “will undoubtedly seem to approximate [human intelligence] if we stick to the surface. And, in many cases, the surface is enough.” [This is an extremely important point when applying LLMs to a problem: are you operating at the surface level, or five levels deep?]

Let's look at some examples from legal contract analysis. Consider the following sentence from a legal contract:

"The parties agree that any disputes arising under this agreement shall be resolved through binding arbitration."

An LLM trained on legal contract data could predict that the most likely continuation after "arbitration" is "in accordance with the rules of the American Arbitration Association." This prediction is based on the fact that this language is commonly used in legal contracts to describe the process of binding arbitration.

Similarly, consider the following sentence:

"The Company may terminate this agreement upon written notice to the Contractor if the Contractor is in material breach of this agreement."

An LLM trained on legal contract data could predict that the most likely continuation after "agreement" is "or if the Contractor becomes insolvent." This prediction is based on the fact that this language is commonly used in legal contracts to describe circumstances under which a contract may be terminated.
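A multi-word continuation like this is produced one word (strictly, one token) at a time, with each prediction appended to the context before the next one is made. The sketch below illustrates that loop with greedy decoding over a toy bigram model. The corpus and the helper names `train_bigram_counts` and `continue_greedily` are hypothetical, and unlike a real LLM this model only looks at the single previous word rather than the whole preceding context (it also lowercases everything, so "Contractor" becomes "contractor").

```python
from collections import Counter, defaultdict

def train_bigram_counts(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def continue_greedily(counts, prompt, max_words=10):
    """Extend the prompt one word at a time, always appending the word that
    most often followed the current last word in the training corpus."""
    words = prompt.lower().split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in training
        next_word, _count = followers.most_common(1)[0]
        words.append(next_word)  # the prediction becomes part of the context
    return " ".join(words)

# Hypothetical training clauses
corpus = [
    "terminate this agreement or if the contractor becomes insolvent",
    "material breach of this agreement or if the contractor becomes insolvent",
    "this agreement shall be governed by delaware law",
]

counts = train_bigram_counts(corpus)
print(continue_greedily(counts, "in material breach of this agreement"))
```

The loop stops either after `max_words` predictions or when the last word has no known follower, which is a crude stand-in for the end-of-text signal a real model learns.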

So, how should we be thinking about LLMs?

LLMs are an extremely powerful tool, and we need to learn how to use them to our advantage reliably: safely and consistently. Here are a couple of real-world analogies to make this more concrete:

The LLM as a GPS for legal documents

Just as a GPS system uses data to provide you with turn-by-turn directions to your destination, an LLM can be thought of as a GPS for legal documents. It uses data from a large corpus of legal text to identify key terms and phrases, and then guides you through the document, highlighting important provisions and helping you to navigate complex legal language.

The LLM as a spellchecker for legal language

Similarly, an LLM can be compared to a spellchecker for legal language. Just as a spellchecker uses a dictionary of words to identify misspellings and suggest corrections, an LLM uses a large corpus of legal text to identify patterns and suggest likely next words or phrases. This can help to catch errors or inconsistencies in legal documents and keep them written in clear, concise language.
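A rough sketch of the spellchecker idea, again using a toy bigram model: the hypothetical `flag_unlikely` helper below flags any word whose probability, given the previous word, falls below a threshold. A real tool would use an LLM's own token probabilities rather than bigram counts; the corpus and the 0.1 threshold here are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_counts(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def flag_unlikely(counts, sentence, threshold=0.1):
    """Hypothetical helper: return (previous word, word, probability) for each
    word whose probability given the previous word is below the threshold."""
    words = sentence.lower().split()
    flagged = []
    for prev, word in zip(words, words[1:]):
        followers = counts.get(prev)
        if not followers:
            continue  # no evidence about what follows an unseen word
        # Counter returns 0 for a word it has never seen after `prev`
        p = followers[word] / sum(followers.values())
        if p < threshold:
            flagged.append((prev, word, round(p, 3)))
    return flagged

# Hypothetical training clauses
corpus = [
    "disputes shall be resolved through binding arbitration",
    "claims shall be resolved through binding arbitration",
    "the contractor shall be paid within thirty days",
]
counts = train_bigram_counts(corpus)

print(flag_unlikely(counts, "disputes shall be resolved through binding meditation"))
print(flag_unlikely(counts, "claims shall be resolved through binding arbitration"))
```

Here "meditation" (a common slip for "mediation") is flagged because the training clauses never follow "binding" with it. The flip side is that a correct but unusual phrasing would be flagged too: a low probability signals "unusual", not "wrong".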

It is important to note that LLMs do not have full language understanding. Rather, they make predictions based solely on the likelihood of words given the previous words in a sequence. This means that LLMs may generate text that is grammatically correct but could be semantically incorrect or nonsensical.

Understanding text requires you to have a preexisting mental model of the domain that the text talks about, and a way to *interpret* the text by mapping it onto your model. Both of these subproblems are independent of "use the past to predict what comes next".

ContractKen believes this is the true secret to making LLMs super useful in domains like legal contracts. We will cover these topics in detail in upcoming posts. Stay tuned.
